Vector Evaluation and Testing: Syncing to Elasticsearch

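Preface

This post follows on from "Vector Evaluation and Testing: Environment Setup". It records a functional test of Vector writing to Elasticsearch, to check whether it can cover common requirements, in the hope that it serves as a useful reference for others.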

Preparation

Create the Kafka topic:

    kafka-topics --create --bootstrap-server kafka-1:9092 --replication-factor 1 --partitions 6 --topic bigdata-data-v1

List the Kafka topics:

    kafka-topics --list --bootstrap-server kafka-1:9092

Test data

Source of the test data:

https://www.kaggle.com/datasets/eliasdabbas/web-server-access-logs

Sample of the test data:

    54.36.149.41 - - [22/Jan/2019:03:56:14 +0330] "GET /filter/27|13%20%D9%85%DA%AF%D8%A7%D9%BE%DB%8C%DA%A9%D8%B3%D9%84,27|%DA%A9%D9%85%D8%AA%D8%B1%20%D8%A7%D8%B2%205%20%D9%85%DA%AF%D8%A7%D9%BE%DB%8C%DA%A9%D8%B3%D9%84,p53 HTTP/1.1" 200 30577 "-" "Mozilla/5.0 (compatible; AhrefsBot/6.1; +http://ahrefs.com/robot/)" "-"
    31.56.96.51 - - [22/Jan/2019:03:56:16 +0330] "GET /image/60844/productModel/200x200 HTTP/1.1" 200 5667 "https://www.zanbil.ir/m/filter/b113" "Mozilla/5.0 (Linux; Android 6.0; ALE-L21 Build/HuaweiALE-L21) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/66.0.3359.158 Mobile Safari/537.36" "-"
    31.56.96.51 - - [22/Jan/2019:03:56:16 +0330] "GET /image/61474/productModel/200x200 HTTP/1.1" 200 5379 "https://www.zanbil.ir/m/filter/b113" "Mozilla/5.0 (Linux; Android 6.0; ALE-L21 Build/HuaweiALE-L21) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/66.0.3359.158 Mobile Safari/537.36" "-"
    40.77.167.129 - - [22/Jan/2019:03:56:17 +0330] "GET /image/14925/productModel/100x100 HTTP/1.1" 200 1696 "-" "Mozilla/5.0 (compatible; bingbot/2.0; +http://www.bing.com/bingbot.htm)" "-"
    91.99.72.15 - - [22/Jan/2019:03:56:17 +0330] "GET /product/31893/62100/%D8%B3%D8%B4%D9%88%D8%A7%D8%B1-%D8%AE%D8%A7%D9%86%DA%AF%DB%8C-%D9%BE%D8%B1%D9%86%D8%B3%D9%84%DB%8C-%D9%85%D8%AF%D9%84-PR257AT HTTP/1.1" 200 41483 "-" "Mozilla/5.0 (Windows NT 6.2; Win64; x64; rv:16.0)Gecko/16.0 Firefox/16.0" "-"
    40.77.167.129 - - [22/Jan/2019:03:56:17 +0330] "GET /image/23488/productModel/150x150 HTTP/1.1" 200 2654 "-" "Mozilla/5.0 (compatible; bingbot/2.0; +http://www.bing.com/bingbot.htm)" "-"
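To make the structure of these lines concrete, here is a minimal Python sketch that splits one sample line into named fields. The regular expression is only a rough approximation of the "combined" log layout written for this illustration; it is not the pattern Vector uses internally, and the names `COMBINED` and `parse_line` are hypothetical.

```python
import re

# Rough approximation of the "combined" access-log layout (an assumption,
# not Vector's actual pattern). The trailing quoted field is optional.
COMBINED = re.compile(
    r'^(?P<client>\S+) \S+ (?P<user>\S+) '
    r'\[(?P<timestamp>[^\]]+)\] '
    r'"(?P<request>[^"]*)" '
    r'(?P<status>\d{3}) (?P<size>\d+|-) '
    r'"(?P<referer>[^"]*)" "(?P<agent>[^"]*)"'
    r'(?: "(?P<forwarded>[^"]*)")?$'
)


def parse_line(line):
    """Return the fields of one access-log line as a dict, or None if it does not match."""
    match = COMBINED.match(line.strip())
    if match is None:
        return None
    fields = match.groupdict()
    # Convert the numeric fields so they fit an integer mapping later on.
    fields["status"] = int(fields["status"])
    fields["size"] = 0 if fields["size"] == "-" else int(fields["size"])
    return fields


sample = (
    '40.77.167.129 - - [22/Jan/2019:03:56:17 +0330] '
    '"GET /image/14925/productModel/100x100 HTTP/1.1" 200 1696 "-" '
    '"Mozilla/5.0 (compatible; bingbot/2.0; +http://www.bing.com/bingbot.htm)" "-"'
)
record = parse_line(sample)
print(record["client"], record["status"], record["size"])
```

The field names (client, timestamp, request, status, size, referer, agent) were chosen to line up with the ones that appear in the Elasticsearch template later in this post.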

Write the data to Kafka:

    kafka-console-producer --broker-list kafka-1:9092 --topic bigdata-data-v1 < ./access.log

Consume the data to check that it arrived:

    kafka-console-consumer --bootstrap-server kafka-1:9092 --topic bigdata-data-v1 --from-beginning

Writing to ES

Write the Vector configuration file:

    vim /opt/vector/config/es.toml

    [api]
    enabled = true
    address = "0.0.0.0:8686"

    # Read the raw log lines from Kafka, starting from the earliest offset
    [sources.src_kafka]
    type = "kafka"
    bootstrap_servers = "192.168.31.37:9092"
    auto_offset_reset = "earliest"
    group_id = "consumer-bigdata-es"
    topics = ["bigdata-data-v1"]
    decoding.codec = "bytes"

    # Parse each line with VRL and derive a date field from the timestamp
    [transforms.transform_log]
    type = "remap"
    inputs = ["src_kafka"]
    source = """
    . = parse_nginx_log!(string!(.message), "combined")
    .date, err = format_timestamp(.timestamp, "%Y-%m-%d")
    """

    # Print the transformed events to the console to check their format
    [sinks.sink_out]
    inputs = ["transform_log"]
    type = "console"
    encoding.codec = "json"

Run a test:

    /opt/vector/bin/vector -c /opt/vector/config/es.toml

Check in the console that the output format is correct.
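When checking the console output, the `date` field is the one to look at most closely, since it later decides which index an event lands in. The Python sketch below mimics that derivation for one timestamp from the sample data. It assumes the date is rendered in UTC, which is worth confirming against the Vector version in use; `derive_date` is a hypothetical helper, not part of Vector.

```python
from datetime import datetime, timezone


def derive_date(nginx_timestamp):
    """Convert an access-log timestamp to a YYYY-MM-DD string in UTC (assumed)."""
    # Example input: "22/Jan/2019:03:56:14 +0330"
    parsed = datetime.strptime(nginx_timestamp, "%d/%b/%Y:%H:%M:%S %z")
    return parsed.astimezone(timezone.utc).strftime("%Y-%m-%d")


print(derive_date("22/Jan/2019:03:56:14 +0330"))
```

Under the UTC assumption, a request logged shortly after midnight local time (+0330) would be assigned to the previous day, so events near a day boundary may not fall on the date that appears in the raw log line.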

Create the template in ES:

    PUT /_template/access-log_template
    {
      "template": "access-log_*",
      "settings": {
        "number_of_shards": 12
      },
      "mappings": {
        "properties": {
          "agent": { "type": "text" },
          "client": { "type": "text" },
          "date": { "type": "text" },
          "referer": { "type": "text" },
          "request": { "type": "text" },
          "size": { "type": "integer" },
          "status": { "type": "integer" },
          "timestamp": { "type": "text" }
        }
      },
      "aliases": {
        "access-log": {}
      }
    }
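The template only takes effect for indices whose names match its pattern `access-log_*`. As a quick illustration, the Python sketch below checks a few candidate index names against that pattern. It uses `fnmatchcase` as a stand-in for Elasticsearch's wildcard matching, which is an approximation (shell-style globs also treat `?` and `[...]` specially); `template_applies` is a hypothetical helper.

```python
from fnmatch import fnmatchcase

# Pattern taken from the template above.
TEMPLATE_PATTERN = "access-log_*"


def template_applies(index_name):
    """Return True if the index name matches the template pattern (approximation)."""
    return fnmatchcase(index_name, TEMPLATE_PATTERN)


for name in ["access-log_2019-01-22", "access-log", "accesslog_2019-01-22"]:
    print(name, template_applies(name))
```

Note that the alias name `access-log` itself does not match the pattern, so the alias and the daily indices do not collide.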

Update the configuration file so that the events are written to ES:

    [api]
    enabled = true
    address = "0.0.0.0:8686"

    [sources.src_kafka]
    type = "kafka"
    bootstrap_servers = "192.168.31.37:9092"
    auto_offset_reset = "beginning"
    group_id = "consumer-bigdata-es"
    topics = ["bigdata-data-v1"]
    decoding.codec = "bytes"

    [transforms.transform_log]
    type = "remap"
    inputs = ["src_kafka"]
    source = """
    . = parse_nginx_log!(string!(.message), "combined")
    .date, err = format_timestamp(.timestamp, "%Y-%m-%d")
    """

    # Write the parsed events to one index per day, named after the date field
    [sinks.sink_es]
    type = "elasticsearch"
    inputs = ["transform_log"]
    endpoints = ["http://192.168.31.37:9200"]
    api_version = "v7"
    id_key = "_id"
    mode = "bulk"
    bulk.index = "access-log_{{ date }}"
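To show what `mode = "bulk"` together with `bulk.index = "access-log_{{ date }}"` amounts to, the Python sketch below builds a request body in the newline-delimited format of the Elasticsearch bulk API: one action line naming the target index, followed by one document line. This is an illustration of the format only, not Vector's own code; `bulk_body` and the trimmed-down events are hypothetical.

```python
import json


def bulk_body(events, index_prefix="access-log_"):
    """Build a bulk-API body: an action line plus a source line per event."""
    lines = []
    for event in events:
        # The index name is derived from the event's own date field.
        action = {"index": {"_index": index_prefix + event["date"]}}
        lines.append(json.dumps(action))
        lines.append(json.dumps(event))
    # The bulk API expects the body to end with a newline.
    return "\n".join(lines) + "\n"


# Trimmed-down events with values taken from the sample log lines.
events = [
    {"client": "31.56.96.51", "status": 200, "size": 5667, "date": "2019-01-22"},
    {"client": "91.99.72.15", "status": 200, "size": 41483, "date": "2019-01-22"},
]
body = bulk_body(events)
print(body, end="")
```

Because the index name comes from each event's `date` value, events from different days in the same batch would be routed to different indices, all of which match the `access-log_*` template.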

Run:

    /opt/vector/bin/vector -c /opt/vector/config/es.toml

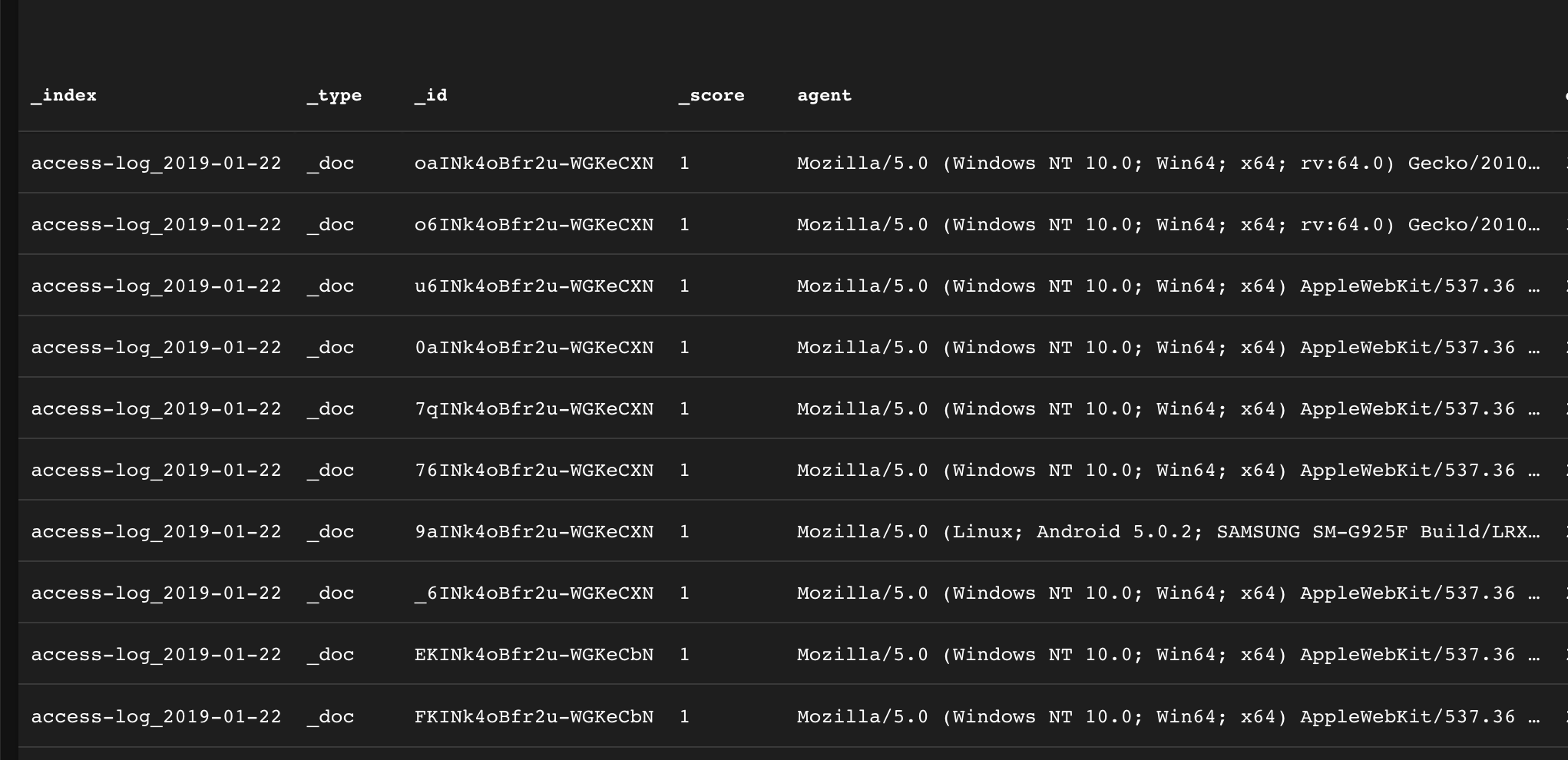

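Check that the data was written correctly

As the screenshot shows, both the indices and the alias were created successfully.

A spot check of the documents in the indices shows that the data is correct as well.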

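Conclusion

Based on this deployment and test, using VRL in Vector to parse and transform the logs and then write them to Elasticsearch works correctly from a functional standpoint.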

Hwsdien